Better formatter auto-discovery in FakeItEasy 1.13.0

A few weeks ago, I wrote about the problems that FakeItEasy’s assembly scanning was causing while it was looking for user-defined extensions. To recap, FakeItEasy was scanning all assemblies in the AppDomain and the working directory, looking for types that implemented IArgumentValueFormatter, IDummyDefinition, or IFakeConfigurator. This process was quite slow. Worse, it raised LoaderLock exceptions when debugging, and Runtime errors anytime I ran my tests using the ReSharper test runner.

At that time, I’d opened issue 130, intended to allow configuration of the scanning procedure. I’m happy to say that the issue has been closed “no fix”. Instead, I’ve contributed the fix for Issue 133 — Improved performance of assembly scanning. It doesn’t introduce any configuration options, but streamlines the scanning process.

The original behaviour was:

- find all the DLLs in the application directory

- load all the found DLLs

- find the distinct assemblies among those loaded from the directory and those already in the AppDomain

- scan each assembly and add all the types to a list

The new behaviour, heavily inspired by Nancy’s bootstrapper-finding code, is:

- find all the DLLs in the application directory

- discard DLLs that are already part of the AppDomain – We don’t even have to crack these files open again, since we already know everything about them. Note that this check examines the absolute paths to the DLL and the loaded assembly, and will be fooled by shadow copying. So, if your test runner makes shadow copies, this time won’t be saved. I turned off shadow copying with no ill effects (and a tremendous speedup), but your mileage may vary.

- load each remaining DLL for reflection only – This may be faster, and it may not, but it has another big advantage – it doesn’t cause any of the code in the assembly to execute. (It was the execution of the assembly code that caused my LoaderLock and Runtime errors.)

- for each assembly that references FakeItEasy, fully load it – If we don’t do this, we can’t scan for all the types in the assembly because

When using the ReflectionOnly APIs, dependent assemblies must be pre-loaded or loaded on demand through the ReflectionOnlyAssemblyResolve event.

according to the error I got when I tried it. Note that excluding assemblies that don’t reference FakeItEasy means we only examine assemblies that could possibly define formatting/dummy/configuration extensions, cutting down on the scanning time.

- scan each of the following, remembering all contained types:

- the assemblies we just loaded from files,

- the AppDomain assemblies that reference FakeItEasy, and

- FakeItEasy – We need to include FakeItEasy explicitly because it defines its own formatter extensions, and since we’re otherwise only looking at assemblies that reference FakeItEasy, we’d miss it.
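To make the “discard DLLs that are already part of the AppDomain” step concrete, here is a minimal sketch in Python rather than FakeItEasy’s actual C#. The function name and sample paths are hypothetical, and the case-insensitive comparison is my assumption about Windows paths. The only behaviour taken from the description above is that candidate files are matched against loaded assemblies by path, which is why a shadow copy defeats the check.

```python
import ntpath


def dlls_still_to_load(directory_files, loaded_assembly_paths):
    """Return the DLLs in the directory that are not already loaded.

    Hypothetical helper: paths are normalised and compared ignoring case,
    which is an assumption about how Windows paths should be matched.
    """
    def normalise(path):
        return ntpath.normcase(ntpath.normpath(path))

    loaded = {normalise(path) for path in loaded_assembly_paths}
    return [
        path for path in directory_files
        if path.lower().endswith('.dll') and normalise(path) not in loaded
    ]


if __name__ == '__main__':
    files = [r'C:\tests\bin\FakeItEasy.dll', r'C:\tests\bin\My.Tests.dll']
    # Loaded from the same directory: the path check recognises the file.
    print(dlls_still_to_load(files, [r'C:\tests\bin\FakeItEasy.dll']))
    # Loaded from a shadow copy: the paths differ, so nothing is discarded.
    print(dlls_still_to_load(files, [r'C:\Temp\shadow\FakeItEasy.dll']))
```

In the second call the shadow-copied assembly lives at a different path, so both files would be opened again and the time saving is lost.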

This new scanning behaviour has been released in the FakeItEasy 1.13.0 build, and has been a boon to me already. I’m enjoying the faster test runs (0.534 seconds for my first test, versus 1.822 (or more)) and the improved stability of the test runner. NuGet it now.

Watch your spaces – HTTP Error 500.19 – Internal Server Error

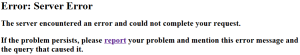

Late last week at the Day Job, a colleague came to me with a problem. The web service he was trying to hit was throwing an error he’d never seen before:

HTTP Error 500.19 – Internal Server Error

The requested page cannot be accessed because the related configuration data for the page is invalid.

I’d never seen it before either, at least not in this exact incarnation. Take a look.

In case the text isn’t so clear, here are the details:

| Module | IpRestrictionModule |
|---|---|
| Notification | BeginRequest |
| Handler | WebServiceHandlerFactory-Integrated-4.0 |
| Error Code | 0x80072af9 |
| Requested URL | http://localhost:80/My.Virtual.Directory/Service.asmx |
| Physical Path | C:\inetpub\wwwroot\My.Virtual.Directory\Service.asmx |
| Logon Method | Not yet determined |
| Logon User | Not yet determined |

The error suggested that we had a problem with the configuration file, but the web.config was present (and well-formed), and there were no obvious permission problems, so it seemed the file was being read. There was nothing in the event logs. Web searches yielded nothing that matched the 0x80072af9 error code or the description of the error. Even ERR.exe, recommended by Troubleshooting HTTP 500.19 Errors in IIS 7, failed me.

Fortunately, there were sibling virtual directories on the server, and they were working fine, even under the same App Pool. I knew that this virtual directory, unlike the others, restricted access to a whitelist of IP addresses. So, I changed the security/ipSecurity node’s allowUnlisted to true, just in case for some reason the clients’ IP addresses weren’t being detected properly. No change.

Frustrated, I removed the whole security node. The service worked!

So I took a closer look at the node:

<security>

<ipSecurity allowUnlisted="false">

<add ipAddress="127.0.0.1" allowed="true" />

<add ipAddress="1.2.3.4 " allowed="true" />

</ipSecurity>

</security>

Check out that “1.2.3.4” ipAddress. Now check it again. It’s actually “1.2.3.4 ”, with a space at the end. (I bolded the space there, just so you wouldn’t miss it.) It seems that this messes up the IP parsing, and IIS is completely flummoxed. Remove the space, and all is well.
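A check along these lines would have caught the problem before it reached IIS. This is a small Python sketch, not an IIS tool: the function name is made up, and it assumes the ipSecurity section looks like the snippet above. It reports any ipAddress value that does not parse as a clean IP address, which includes values with stray whitespace.

```python
import ipaddress
import xml.etree.ElementTree as ET


def suspicious_ip_entries(config_xml):
    """Return ipAddress values under ipSecurity that are not valid IP addresses."""
    root = ET.fromstring(config_xml)
    bad = []
    # iter() also visits the root, so a fragment or a whole file both work.
    for section in root.iter('ipSecurity'):
        for entry in section.findall('add'):
            value = entry.get('ipAddress')
            if value is None:
                continue
            try:
                # Strict parse: surrounding whitespace is rejected.
                ipaddress.ip_address(value)
            except ValueError:
                bad.append(value)
    return bad


if __name__ == '__main__':
    sample = '''
    <security>
      <ipSecurity allowUnlisted="false">
        <add ipAddress="127.0.0.1" allowed="true" />
        <add ipAddress="1.2.3.4 " allowed="true" />
      </ipSecurity>
    </security>
    '''
    # repr() makes the trailing space visible.
    print([repr(value) for value in suspicious_ip_entries(sample)])
```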

Fixated on Fixie – the birth of a new unit test runner

I enjoy reading about how software is made, and I like unit testing frameworks. So, when I heard about Patrick Lioi’s new project Fixie, I rushed to check it out.

In this case, “check it out” doesn’t mean “clone the repo and dig around the source code”. Nor does it mean “install the NuGet package and build something”. Although I may do those things in the future.

Nope. It means I read Mr. Lioi’s articles about Fixie and its development. And I am having a great time. More so than hearing about Fixie’s features (or more often lack of features), I’m enjoying seeing Mr. Lioi’s approach to setting up a new project, including:

- prototyping the scariest integration points first

- the importance of starting out with a one-click build, for himself and for potential future contributors

- streamlining, automating, or eliminating as much ceremony as possible

- bootstrapping, and more!

The articles are well-written and articulate, and mildly funny. They’re trending a little more into the implementation of Fixie itself, rather than guiding philosophies, but I still find them interesting. And it’s worth noting that all the while I was enjoying the articles, I was thinking in the back of my head “this is a great exercise, and very instructive, but I’ve no interest in actually using Fixie; I’m content with NUnit”. Until I read DRY Test Inheritance. I really liked the low-ceremony way conventions are used to locate test setups and teardowns. It hooked me, even though I am usually not a fan of test class inheritance, and the scheme described in this article has more weight than the Default Convention.

Of course, we’ll probably never switch at the Day Job, at least not until the ReSharper test runner supports Fixie, but it might be fun to use for a small home project.

FakeItEasy’s argument formatter auto-discovery – boon and inconvenience

Hi again. At the Day Job, we’ve recently dropped Typemock Isolator and NMock2 as the mocking frameworks of choice in the products that I work on. We’ve jumped on the FakeItEasy bandwagon. So far, we’re enjoying the change. FakeItEasy is powerful enough and the concepts and syntax fit the mind pretty well. Today I’m going to focus on one feature that I’ve really enjoyed but that has been an occasional thorn in the side.

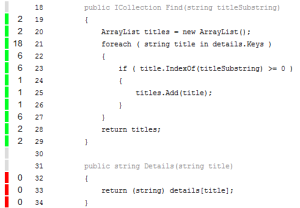

This is a feature that Patrik Hägne has blogged about before, but that I think is still not well known. I found it accidentally, and have benefited from it. You can provide custom argument renderers to improve the messages you get when FakeItEasy detects an error due to missing or mismatched calls. Check out Mr. Hägne’s post for the full details, but if I may be so bold as to rip off some of his examples, here’s the gist (original meaning, not fancy github one).

Define a class that extends ArgumentValueFormatter<Person> (where Person is a class in your project), override GetStringValue with something that renders a Person, and FakeItEasy errors that need to talk about a Person change from this

Assertion failed for the following call:

'FakeItEasy.Examples.IPersonRepository.Save()'

Expected to find it exactly never but found it #1 times among the calls:

1. 'FakeItEasy.Examples.IPersonRepository.Save(

personToSave: FakeItEasy.Examples.Person)'

to

Assertion failed for the following call:

'FakeItEasy.Examples.IPersonRepository.Save()'

Expected to find it exactly never but found it #1 times among the calls:

1. 'FakeItEasy.Examples.IPersonRepository.Save(

personToSave: Person named Patrik Hägne,

date of birth 1977-04-05 (12227,874689919 days old).)'

It’s very easy to use, and quite helpful. However, lately I’ve had a few difficulties with some test projects and have tracked it back to an aspect of this feature. Specifically, for certain very large projects:

- My test fixtures are taking a long time to start up – several extra seconds while waiting for the first test to run. Specifically, the delay was happening in my first A.Fake call.

- During this delay, several “LoaderLock was detected” popups appear, which have no obvious ill effect, but are very annoying, and

- Finally, after a recent upgrade of dependent libraries, when I run the tests using the ReSharper test runner, I see a “Microsoft Visual C++ Runtime Library Runtime Error!” in JetBrains.ReSharper.TestRunner.CLR4.exe. It claims that I’m trying to “use MSIL code from this assembly during native code initialization”. The tests continue to run, but the TestRunner process never exits, and needs to be killed before tests can be run again.

The reason all these things are happening during the first FakeItEasy call is the way that FakeItEasy finds the custom ArgumentValueFormatter implementations. It scans all available assemblies, looking for any implementations. In this case, “all available assemblies” means every assembly in the AppDomain as well as all *.dll files in the current directory. This actually makes the feature a little more powerful than Mr. Hägne indicated – you can define your extensions in assemblies other than the test project’s. In fact, this is how FakeItEasy finds its own built-in ArgumentValueFormatters (one for null, one for System.String, and one for any System.Object that doesn’t have its own extensions). FakeItEasy is in the AppDomain, so its extensions are located by the scan. One benefit of doing such a wide scan is that it’s possible to define the formatter extension classes in a shared library that can be used across test projects.

It’s the scanning that’s causing my pain. First, some of the solutions at the Day Job are quite large, with dozens of assemblies in the test project’s AppDomain and build directory. Even if everything went well, it would take seconds to load and scan all those assemblies. Second, some of the DLLs in the directory aren’t under our control. Some aren’t managed. Some don’t play well with others. It’s these ones that are causing the other problems I mentioned above. Loading these assemblies causes them to be accessed in ways that they were never planned to be, which causes the LoaderLocks and Runtime Error.

What now? We’re investigating the assemblies we’re using to see if we can’t access them in a better way, but that’s probably going to be a slow operation, and one that may not bear fruit. In the meantime, I’ve forked FakeItEasy and am using the custom build in the one project that it was causing the most pain. The custom version only loads extensions from the FakeItEasy assembly. It’s kind of a terrible hack, and means that we can’t define custom extensions, but we hadn’t for that project anyhow, so it’s not yet causing pain. On the brighter side, there are no more errors or popups, and the tests start much more quickly.

Longer term, I’ve created FakeItEasy issue 130 to make the extension location a little more flexible. Once accepted and implemented, it will give the user control over how extension classes are located during FakeItEasy startup. (Then I can resume using the vanilla FakeItEasy at the Day Job.) If you’re curious, pop on over and take a look.

ReportGenerator indexing your whole drive? Check the case of your fullPaths.

[Update on 2013-06-22: I should’ve mentioned this a while ago, but the issue and patch I submitted were accepted and built into ReportGenerator 1.7.3.0, so if you have anything newer, you should be good.]

Recently I was working on a project at the Day Job, using OpenCover 4.0.804 and ReportGenerator 1.7.1.0 to report my test coverage, as is my wont, when the report generation started taking figuratively forever. Investigating, I saw something like

found report files: D:/sandbox/project/src/buildlogs/temp_test_coverage/Project.UnitTest.coverage.xml

Loading report 'D:\sandbox\project\src\buildlogs\temp_test_coverage\Project.UnitTest.coverage.xml'

Preprocessing report

Indexing classes in directory 'D:\sandbox\project\src\Module1\SubPath\'

Added coverage information of 370/370 auto properties to module 'Module1'

Indexing classes in directory 'D:\'

My D: drive isn’t the hugest, but it’s big enough, so that explained the delay. And of course, I certainly didn’t want anything above D:\sandbox\project\src indexed.

I took a peek at my .coverage.xml file and the ReportGenerator code until I found the offending lines

<Module hash="9A-A3-0A-C0-1D-57-BA-2A-C2-D4-5B-9E-08-DE-BD-2D-46-04-AF-32">

<FullName>D:\Sandbox\project\src\Module\UnitTest\bin\Release\Module.dll</FullName>

<ModuleName>Module</ModuleName>

<Files>

…

<File uid="803" fullPath="D:\sandbox\project\src\Module\File1.cs" />

<File uid="806" fullPath="D:\Sandbox\project\src\Module\File2.cs" />

<File uid="808" fullPath="D:\sandbox\project\src\Module\File3.cs" />

…

Note the “Latin capital letter S” at the beginning of “Sandbox” in the fullPath for File2.cs. All the other fullPaths had a “Latin small letter S”.

When ReportGenerator goes looking for *.cs files to scan, it starts at the directory whose name is the longest common prefix of all the fullPaths. Because “S” isn’t “s”, it came up with “D:\”.
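The effect is easy to reproduce. The following Python sketch is not ReportGenerator’s code; the function name and the ignore_case switch are my own, for illustration. It finds the deepest directory shared by a set of Windows paths, comparing one path segment at a time.

```python
def common_directory(full_paths, ignore_case=False):
    """Return the deepest directory shared by all the Windows paths given."""
    key = str.lower if ignore_case else (lambda segment: segment)
    # Drop the file name from each path and keep the directory segments.
    directories = [path.split('\\')[:-1] for path in full_paths]
    common = []
    for segments in zip(*directories):
        if len({key(segment) for segment in segments}) != 1:
            break  # the paths diverge at this level
        common.append(segments[0])
    return '\\'.join(common) + '\\'


if __name__ == '__main__':
    paths = [
        r'D:\sandbox\project\src\Module\File1.cs',
        r'D:\Sandbox\project\src\Module\File2.cs',
        r'D:\sandbox\project\src\Module\File3.cs',
    ]
    print(common_directory(paths))                    # D:\
    print(common_directory(paths, ignore_case=True))  # D:\sandbox\project\src\Module\
```

With a case-sensitive comparison the paths diverge immediately after the drive letter, which matches the “Indexing classes in directory 'D:\'” line in the log.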

I submitted an issue on the ReportGenerator CodePlex project, so maybe we’ll see a fix soon.

Of course I wondered “Why does the S differ for that entry?” but I figured I’d look at one thing at a time, and locating the fix for ReportGenerator was quicker.

Moving LibraryHippo to Python 2.7 – OpenID edition

Now that Google has announced that Python 2.7 is fully supported on Google App Engine, I figured I should get my act in gear and convert LibraryHippo over. I’d had a few aborted attempts earlier, but this time things are going much better.

How We Got Here – Cloning LibraryHippo

One of the requirements for moving to Python 2.7 is that the app must use the High Replication Datastore, and LibraryHippo did not. Moreover, the only way to convert to the HRD is to copy your data to a whole new application. So I bit the bullet, and made a new application from the LibraryHippo source.

When you set up a new application, you have the option of allowing federated authentication via OpenID. I’d wanted to do this for some time, so I thought, “While I’m changing the datastore, template engine, and version of Python under the hood, why not add a little complexity?”, and I picked it.

The Simplest Thing That Should Work – Google as Provider

In theory, LibraryHippo should be able to support any OpenID provider, but I wanted to start with Google as provider for a few reasons:

- concentrating on one provider would get the site running quickly and I could add additional providers over time

- I need to support existing users – they’ve already registered with App Engine using Google, and I want things to keep working for them, and

- I wanted to minimize my headaches – I figure, if an organization supports both an OpenID client feature and an OpenID provider, they must work together as well as any other combination.

Even though there’s been official guidance around using OpenID in App Engine since mid-2010, I started with Nick Johnson’s article for an overview – he’s never steered me wrong before. And I’m glad I did. While the official guide is very informative, Nick broke things down really well. To quote him,

Once you’ve enabled OpenID authentication for your app, a few things change:

- URLs generated by create_login_url without a federated_identity parameter specified will redirect to the OpenID login page for Google Accounts.

- URLs that are protected by “login: required” in app.yaml or web.xml will result in a redirect to the path “/_ah/login_required”, with a “continue” parameter of the page originally fetched. This allows you to provide your own openid login page.

- URLs generated by create_login_url with a federated_identity provider will redirect to the specified provider.

That sounded pretty good – the existing application didn’t use login: required anywhere, just create_login_url (without a federated_identity, of course).

So, LibraryHippo should be good to go – every time create_login_url is used to generate a URL, it’ll send users to Google Accounts. I tried it out.

It just worked, almost. When a not-logged-in user tried to access a page that required a login, she was directed to the Google Accounts page. There were cosmetic differences, but I don’t think they’re worth worrying about:

[Screenshots: standard Google login page; federated Google login page]

After providing her credentials, the user was redirected to a page that asked her if it was okay for LibraryHippo to know her e-mail address. After that approval was granted, it was back to the LibraryHippo site and everything operated as usual.

However, login: admin is still a problem. I really shouldn’t have been surprised by this, but login: admin seems to do the same thing that login: required does – redirect to /_ah/login_required, which is not found.

This isn’t a huge problem – it only affects administrators (me), and I could work around it by visiting a page that required any kind of login first, but it still stuck in my craw.

Fortunately, the fix is very easy – just handle /_ah/login_required. I ripped off Nick’s OpenIdLoginHandler, only instead of offering a choice of providers using users.create_login_url, this one always redirects to Google’s OpenId provider page. With this fix, admins are able to go directly from a not-logged-in state to any admin required page.

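    import webapp2
    from google.appengine.api import users

    class OpenIdLoginHandler(webapp2.RequestHandler):
        def get(self):
            # App Engine passes the originally requested page as 'continue'
            continue_url = self.request.GET.get('continue')
            # no federated_identity, so this goes to Google Accounts
            login_url = users.create_login_url(dest_url=continue_url)
            self.redirect(login_url)

    ...

    handlers = [ ...
        ('/_ah/login_required$', OpenIdLoginHandler),
        ... ]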

Using Other Providers

With the above solution, LibraryHippo’s authentication system has the same functionality as before – users can login with a Google account. It’s time to add support for other OpenID providers.

username.myopenid.com

I added a custom provider picker page as Nick suggested, and tried to login with my myOpenID account, with my vanity URL as provider – blair.conrad.myopenid.com. The redirect to MyOpenID worked just as it should, and once I was authenticated, I landed back at LibraryHippo, at the “family creation” page, since LibraryHippo recognized me as a newly-authenticated user, with no history.

myopenid.com

Buoyed by my success, I tried again, this time using the “direct provider federated identity” MyOpenID url – myopenid.com. It was a complete disaster.

Once MyOpenID had confirmed my identity, and I was redirected back to the LibraryHippo application, App Engine threw a 500 Server Error. There’s nothing in the logs – just the horrible error on the screen. In desperation, I stripped down my login handler to the bare minimum, using the example at Using Federated Authentication via OpenID in Google App Engine as my guide. I ended up with this class that reproduces the problem:

    class TryLogin(webapp2.RequestHandler):
        def get(self):
            providers = {
                'Google' : 'www.google.com/accounts/o8/id',
                'MyOpenID' : 'myopenid.com',
                'Blair Conrad\'s MyOpenID' : 'blair.conrad.myopenid.com',
                'Blair Conrad\'s WordPress' : 'blairconrad.wordpress.com',
                'Yahoo' : 'yahoo.com',
                'StackExchange': 'openid.stackexchange.com',
                }

            user = users.get_current_user()
            if user: # signed in already
                self.response.out.write('Hello <em>%s</em>! [<a href="%s">sign out</a>]' % (
                    user.nickname(), users.create_logout_url(self.request.uri)))
            else: # let user choose authenticator
                self.response.out.write('Hello world! Sign in at: ')
                for name, uri in providers.items():
                    self.response.out.write('[<a href="%s">%s</a>]' % (
                        users.create_login_url(dest_url='/trylogin', federated_identity=uri), name))

    ...

    handlers = [
        ('/trylogin$', TryLogin),
        ('/_ah/login_required$', OpenIdLoginHandler),
        ...
        ]

Interestingly, both Yahoo! and WordPress work, but StackExchange does not. If it weren’t for Yahoo!, I’d guess that it’s the direct provider federated identities that give App Engine problems (yes, Google is a direct provider, but I consider it to be an exception in any case).

Next steps

For now, I’m going to use the simple “just Google as federated ID provider” solution that I described above. It seems to work, and I’d rather see if I can find out why these providers fail before implementing an OpenID selector that excludes a few providers. Also, implementing the simple solution will allow me to experiment with federated IDs on the side, since I don’t know how e-mail will work with federated IDs, or how best to add federated users as families’ responsible parties. But that’s a story for another day.

Best all-around .NET coverage tool – OpenCover

This is the gala awards show, where my chosen coverage tool is announced.

If you’ve come this far, you’ve probably already read the title, and it won’t surprise you to learn that I’ve chosen OpenCover. It offered the best fit for my requirements – the only areas where I found it lacking were in the “nice to haves”. Witness:

- OpenCover is pretty easy to run from the command line – second only to NCover.

- It can (with the help of ReportGenerator) generate coverage reports in XML and HTML.

- OpenCover has an integrated auto-deploy, so it can be bundled with the source tree and new developers or build servers just work – dotCover has no such option, and I was not able to use NCover this way.

- I’ve been able to link with TypeMock Isolator with little trouble, and the new Isolator may obviate the need for my small workaround.

- It’s free. Aside from the obvious benefit, it’s nice to not have to count licenses when adding developers and/or build server nodes.

- There’s no GUI integration, but this was a nice to have. If some developer is absolutely dying to have this, my boss’s boss has indicated that money could be available for individual licenses of something like dotCover.

- There’s no support for integrating with IIS. We don’t need this right now, so that’s okay. Again, if one or two developers find a need, we have the option of buying a license of some other tool. Even better, support may be coming soon.

After considering OpenCover’s strengths in the areas I absolutely needed, and its weaknesses, which all appear to be in areas that I care a little less about, I recommended it to the boss’s boss, who agreed with the assessment and was happy to keep a little money in his pocket for now.

So, I grabbed 2.0.802, incorporated it into one product’s build, and out popped coverage numbers. Very exciting. I did notice a few things, though:

- Branch coverage has been added since I last evaluated the product!

- One fairly complicated integration-style test fixture is not runnable under OpenCover – the class tested creates a background thread and starting the thread results in a System.AccessViolationException. I was unable to determine the cause of this, and have temporarily removed the test from coverage, instead executing it with NUnit directly. I’m going to continue investigating this problem.

- Since I’m XCopy deploying, I was bitten by the dependency on the Microsoft Visual C++ 2010 Redistributable Package – I ended up including the DLLs in my imported bundle, and all was well, but I worry a little about the stability of this solution.

- The time taken to execute our tests (there are over 5000, and many hit a database) increased from about 7 minutes to about 8. This is an acceptable degradation, since the test run isn’t the bottleneck in our build process.

- The number of “Cannot instrument as no PDB could be loaded” messages is daunting. I’m hoping that things will be improved once I get a build that contains a fix for issue 40.

Hasty Impressions: NCover

Today I’m looking at my fourth candidate: NCover.

I tried NCover 3.4.18.6937.

The Cost

NCover Complete is $479 plus $179 for a 1-year subscription (which gives free version updates). I thought this was a little steep. NCover Classic is $199/$99. I looked at NCover Complete, because that’s the kind of trial version they give out. Also, the feature set for Classic was too similar to that offered by other tools that cost less. Check out the feature comparison, if you like.

Support

I haven’t had enough problems to really stress the support network, but I will say this – the NCover chaps are really keen on keeping in touch with people who have trial copies of the program. I’ve received 3 separate e-mails from my assigned NCover rep in the 2 weeks since I first installed my trial copy. I replied to one of these, asking for a clarification on the VS integration (see below), and got a speedy response.

It’s nice to see such a high level of customer support, but I do feel just a little bit smothered…

VS integration

The best advice from the NCover folks is to create an external tool to launch NCover. That’s an okay solution if you want to run all the unit tests in a project and profile them, but it lacks flexibility. Then to actually look at the report, you have to launch the NCover Explorer and load the report.

There’s additional advice at the end of the Running NCover from Visual Studio video – if you want a more integrated Visual Studio experience, you should obtain TestDriven.Net. That probably works well enough, but I’m not wild about paying an additional $189 per head (roughly) for a test runner that (in my opinion, and excepting the NCover integration of course) is a less robust solution than the one that comes bundled with ReSharper.

Oh. There’s one more feature that I found – once you are examining a coverage report, you can Edit in VS.NET, which opens the appropriate file in Visual Studio. This is somewhat convenient, but doesn’t warp you to the correct line, which is a bit of a letdown.

Command Line Execution

The command line offers many and varied options for configuring the coverage run. Here’s a sample invocation:

NCover.Console.exe //exclude-assemblies BookFinder.Tests //xml ..\..\coverage.nccover nunit-console.exe bin\debug\BookFinder.Tests.dll

Upon execution, NCover tells me this:

Adding the '/noshadow' argument to the NUnit command line to ensure NCover can gather coverage data. To prevent this behavior, use the //literal argument.

I really like that it defaults to passing the recommended /noshadow to NUnit. The // switches are also a good touch – it makes providing arguments to the executable being covered a lot easier. These features make the command line invocation the best I’ve seen among coverage tools.

GUI Runner

The GUI runner looks just like a GUI wrapper on top of the command line options – they appear to support the same level of configuration. After the tests have been run, the NCoverExplorer allows one to browse the results and to save a report as XML or HTML.

XML Report

Reports are generated either from the GUI runner or by using the NCover.Reporting executable, which has a plethora of options for choosing XML or HTML reports of various flavours.

XML reports contain all the information you might want to summarize for inclusion in build output, but they’re hard to understand. Witness:

<stats acp="95" afp="80" abp="95" acc="20" ccavg="1.5" ccmax="5" ex="0" ei="1" ubp="12" ul="40" um="10" usp="39" vbp="63" vl="89" vsp="105" mvc="18" vc="2" vm="22" svc="120">

If you stare at this long enough (and correlate with a matching HTML report), you figure out that this means that there are

- 39 unvisited sequence points, and

- 105 visited sequence points

along with various other stats, so using attribute extraction and a little math, we could see that 105/144 or 72.9% of the sequence points are covered.
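Here is roughly what that extraction could look like in a build script. It’s a Python sketch under two assumptions: that usp and vsp mean unvisited and visited sequence points, as worked out above, and that the stats element has been pulled out of the report on its own. The function name is mine, and the sample element is trimmed to the two attributes that matter.

```python
import xml.etree.ElementTree as ET


def sequence_point_coverage(stats_xml):
    """Return the percentage of sequence points visited, from a stats element."""
    stats = ET.fromstring(stats_xml)
    visited = int(stats.get('vsp'))    # visited sequence points
    unvisited = int(stats.get('usp'))  # unvisited sequence points
    return 100.0 * visited / (visited + unvisited)


if __name__ == '__main__':
    sample = '<stats usp="39" vsp="105" />'
    print('%.1f%%' % sequence_point_coverage(sample))  # 72.9%
```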

It’s odd that there are many more reports available for HTML than XML. Notably absent from the XML offering: “Summary”. What is it about summaries that make them unsuitable for rendering as XML when HTML is fine?

Reports of Auto-Deploy

My Support Guy explained that you can xcopy deploy NCover using the //reg flag, but I did not find any documentation on how to do this. Support Guy claims there is an “honour system” kind of licensing model that supports this, but the trial copy I had did not work this way. I eventually abandoned this line of investigation.

Mature Isolator Support

From Visual Studio, under the Typemock menu, configure Typemock Isolator to Link with NCover 3.0.

When using the TypeMockStart MSBuild task, use

<TypeMockStart Link="NCover3.0" ProfilerLaunchedFirst="true"/>

and it just works, assuming you have TypeMock Isolator installed or set to auto-deploy.

IIS

IIS coverage is available, simply by selecting it from the GUI runner options or from the command line using the //iis switch. Other Windows Services can be covered in the same manner. Note though, that these features are only available in the Complete flavour of NCover 3.0.

Sequence Point coverage

Supported, as well as branch point coverage and other metrics, including cyclomatic complexity. Nice options to have, although probably a little advanced for my team’s current needs and experience.

Conclusion

Pros:

- sequence point and branch coverage

- large feature set, including trends, cyclomatic complexity analysis, and much much more

- commercial product with strong support

- report merging

- easy IIS profiling

- supports Isolator

Cons:

- costly

- weak IDE integration

- inconsistent (comparing XML to HTML) report offerings

- confusing auto-deploy

I expected to be blown away by NCover—from all reports, it’s the Cadillac of .NET coverage tools. After demoing it, I figured I’d end up desperately trying to make a case to the Money Guy to shell out hundreds of dollars per developer (and build server), but this did not happen.

While NCover definitely has lots of features, it’s lacking some pretty important ones as well, notably IDE integration. Other features just weren’t as I expected – the cornucopia of report types is impressive, but overkill for a team just starting out, and many of the report types aren’t available in XML and/or are very minor variations on other report types.

Ultimately, I don’t see what NCover offers to justify its price tag, especially across a large team. If ever I felt a need to have one of the specialized reports, I’d consider obtaining a single license for tactical use, but I can’t imagine any more than that.

Hasty Impressions: OpenCover

Today I’m looking at my third candidate: OpenCover.

OpenCover is developed by Shaun Wilde. He was a developer on (and is the only remaining maintainer of) PartCover. He’s used what he learned working on PartCover to develop OpenCover, but OpenCover is a new implementation, not a port.

I tried OpenCover 1.0.514. Since I downloaded it a couple of weeks ago there have been 3 more releases, with the 1.0.606 release promising a big performance improvement.

The Cost

Free! And you can get the source.

VS integration

None that I can find.

Command Line Execution

Covering an application from the command line is easy, and reminiscent of using PartCover the same way. I used this command to see what code my BookFinder unit tests exercised:

OpenCover.Console.exe -arch:64 -register -target:nunit-console.exe -targetargs:bin\debug\BookFinder.Tests.dll -output:..\..\opencover.xml -filter:+[BookFinder.Core]*

Let’s look at that.

- -arch:64 – I’m running on a 64-bit system. I didn’t get any results without this.

- -register – I’m auto-deploying OpenCover. More on that later.

- -target:nunit-console.exe – I like NUnit

- -targetargs:bin\debug\BookFinder.Tests.dll – arguments to NUnit to tell it what assembly to test, and how.

- -output:..\..\opencover.xml – where to put the coverage results. This file is not a report – it’s intended for machines to read, not humans.

- -filter:+[BookFinder.Core]* – BookFinder.Core is the only assembly I was interested in – it holds the business logic.
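For a build script, the same command line can be assembled from its parts. This is only a minimal sketch in Python: the function name and its parameters are made up for illustration, and the flags are exactly the ones used in the command above, nothing more.

```python
def opencover_command(test_assembly, output_path, assembly_name, arch64=True):
    """Build the OpenCover.Console.exe argument list described above.

    The function and parameter names are hypothetical; only the flags
    themselves come from the command line shown in the post.
    """
    args = ["OpenCover.Console.exe"]
    if arch64:
        # Needed on a 64-bit system, per the notes above.
        args.append("-arch:64")
    args += [
        "-register",                          # register the profiler for this run
        "-target:nunit-console.exe",          # the test runner to launch
        f"-targetargs:{test_assembly}",       # what the runner should test
        f"-output:{output_path}",             # machine-readable coverage results
        f"-filter:+[{assembly_name}]*",       # only cover this assembly
    ]
    return args


if __name__ == "__main__":
    command = opencover_command(
        r"bin\debug\BookFinder.Tests.dll",
        r"..\..\opencover.xml",
        "BookFinder.Core",
    )
    print(" ".join(command))
```

The list form can be handed straight to `subprocess.run`, which avoids quoting trouble if a path ever contains spaces.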

GUI Runner

There isn’t one, but I have to wonder if there won’t be. Otherwise, why call the command line coverer OpenCover.Console.exe?

XML Report

OpenCover doesn’t generate a human-readable report. Instead, you can postprocess the coverage output. ReportGenerator is the recommended tool, and it works like a charm.

ReportGenerator.exe .\opencover.xml XmlReport Xml

generates an XML report in the Xml directory. The summary looks like this:

<?xml version="1.0" encoding="utf-8"?>
<CoverageReport scope="Summary">
  <Summary>
    <Generatedon>2011-08-05-2011-08-05</Generatedon>
    <Parser>OpenCoverParser</Parser>
    <Assemblies>1</Assemblies>
    <Files>5</Files>
    <Coverage>71.6%</Coverage>
    <Coveredlines>126</Coveredlines>
    <Coverablelines>176</Coverablelines>
    <Totallines>495</Totallines>
  </Summary>
  <Assemblies>
    <Assembly name="BookFinder.Core.DLL" coverage="71.6">
      <Class name="BookFinder.BookDepository" coverage="85.7" />
      <Class name="BookFinder.BookListViewModel" coverage="50" />
      <Class name="BookFinder.BoolProperty" coverage="50" />
      <Class name="BookFinder.BoundPropertyStrategy" coverage="0" />
      <Class name="BookFinder.ListProperty" coverage="75" />
      <Class name="BookFinder.Property" coverage="100" />
      <Class name="BookFinder.StringProperty" coverage="100" />
      <Class name="BookFinder.ViewModelBase" coverage="81" />
    </Assembly>
  </Assemblies>
</CoverageReport>

ReportGenerator also generates Html and LaTeX output, with a “summary” variant for each of the three output types.

The XML report would be most useful for inclusion in build result reports, but I found the HTML version easy to use to examine coverage results down to the method level.
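To illustrate the "build result report" idea, here is a minimal Python sketch that pulls the poorly covered classes out of a summary like the one above. It assumes the element and attribute names shown in that sample (`Class`, `name`, `coverage`); other ReportGenerator versions may well emit a different layout. The function name and the 75% threshold are made up for the example, and the sample is trimmed to four of the classes.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the summary shown above (XML declaration omitted).
SAMPLE_SUMMARY = """
<CoverageReport scope="Summary">
  <Assemblies>
    <Assembly name="BookFinder.Core.DLL" coverage="71.6">
      <Class name="BookFinder.BookDepository" coverage="85.7" />
      <Class name="BookFinder.BookListViewModel" coverage="50" />
      <Class name="BookFinder.BoundPropertyStrategy" coverage="0" />
      <Class name="BookFinder.Property" coverage="100" />
    </Assembly>
  </Assemblies>
</CoverageReport>
"""


def classes_below(summary_xml, threshold):
    """Return (class name, coverage) pairs under the threshold, worst first."""
    root = ET.fromstring(summary_xml)
    results = []
    for cls in root.iter("Class"):
        coverage = float(cls.get("coverage"))
        if coverage < threshold:
            results.append((cls.get("name"), coverage))
    return sorted(results, key=lambda pair: pair[1])


if __name__ == "__main__":
    for name, coverage in classes_below(SAMPLE_SUMMARY, 75):
        print(f"{name}: {coverage}%")
```

A build step could fail, or just warn, when the returned list is not empty.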

I appreciate the coverage count by each of the lines – not as fancy as dotCover’s “which tests cover this”, but it could be a helpful clue when you’re trying to decide what you need to do to improve your coverage.

Joining Coverage Runs

Perhaps your tests are scattered in space or time and you want to get an overview of all the code that’s covered by them. OpenCover doesn’t really do anything special for you, but ReportGenerator has your back. Specify multiple input files on the command line, separated by semicolons, and the results will be aggregated into a single comprehensive report:

ReportGenerator.exe output1.xml;output2.xml;output3.xml XmlReport Xml
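If the coverage files are produced by separate build steps, the semicolon-separated list can be put together in a script. A minimal Python sketch, assuming only the command shape shown above; the function name is hypothetical, and the trailing arguments are passed through exactly as given rather than interpreted.

```python
def report_generator_command(coverage_files, *remaining_args):
    """Join several coverage files into one ReportGenerator invocation.

    The function name is hypothetical. The remaining arguments (for example
    "XmlReport" and "Xml", as in the command above) are passed through as-is.
    """
    files = list(coverage_files)
    if not files:
        raise ValueError("at least one coverage file is required")
    # ReportGenerator takes the inputs as a single semicolon-separated argument.
    return ["ReportGenerator.exe", ";".join(files), *remaining_args]


if __name__ == "__main__":
    command = report_generator_command(
        ["output1.xml", "output2.xml", "output3.xml"], "XmlReport", "Xml"
    )
    print(" ".join(command))
```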

DIY Auto-Deploy

There’s no built-in auto-deploy for OpenCover. However, I made my own auto-deployable package like so:

- install OpenCover

- copy the C:\Program Files (x86)\OpenCover directory somewhere – call this your package directory

- uninstall OpenCover – you won’t need it any more

Then I just made sure my coverage build step

- knew where the OpenCover package directory was (for the build system at the Day Job, I added it to our “subscribes”)

- used the -register flag mentioned above to register OpenCover before running the tests

That’s it. No muss, no fuss. I did a similar (but easier, since there’s no registration needed) trick with ReportGenerator, and all of a sudden I have a no-deploy system.

In less than an hour’s work, I could upgrade a project so the build servers and all the developers could run a coverage target, with no action on their part, other than pulling the updated source tree and building. (Which is pretty much what the build server does all day long anyhow…)

DIY (for now) Coverage with Isolator

Isolator and OpenCover don’t work together out of the box, but thanks to advice I got from Igal Tabachnik, a Typemock employee, it was not hard to change this.

Isolator’s supported coverage tools are partly configurable. There is a typemockconfig.xml under the Isolator install directory – typically %ProgramFiles(x86)%\Typemock\Isolator\6.0 (or %ProgramFiles%, I suppose). Mr. Tabachnik had me add

<Profiler Name="OpenCover" Clsid="{1542C21D-80C3-45E6-A56C-A9C1E4BEB7B8}" DirectLaunch="false">
  <EnvironmentList />
</Profiler>

to the ProfilerList element, and everything meshed. His StackOverflow answer provides full details and suggests that official support for OpenCover will be added to Isolator.
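The same edit can be scripted, which helps if several machines need it. The Python sketch below is only an illustration: the post confirms the `ProfilerList` element and the `Profiler` entry itself, while the rest of the file's layout is an assumption, so the sample configuration and the function name are hypothetical. `ElementTree` drops comments and the XML declaration when it rewrites a document, so back up the real file before trying anything like this.

```python
import xml.etree.ElementTree as ET

OPENCOVER_CLSID = "{1542C21D-80C3-45E6-A56C-A9C1E4BEB7B8}"

# Hypothetical stand-in for typemockconfig.xml; only ProfilerList is known.
SAMPLE_CONFIG = """
<Configuration>
  <ProfilerList>
    <Profiler Name="SomeOtherTool" Clsid="{00000000-0000-0000-0000-000000000000}" DirectLaunch="false">
      <EnvironmentList />
    </Profiler>
  </ProfilerList>
</Configuration>
"""


def add_opencover_profiler(config_xml):
    """Return the config text with an OpenCover Profiler entry added once."""
    root = ET.fromstring(config_xml)
    profiler_list = root if root.tag == "ProfilerList" else root.find(".//ProfilerList")
    if profiler_list is None:
        raise ValueError("no ProfilerList element found")
    # Leave the file alone if the entry is already there.
    for existing in profiler_list.findall("Profiler"):
        if existing.get("Name") == "OpenCover":
            return config_xml
    profiler = ET.SubElement(
        profiler_list,
        "Profiler",
        {"Name": "OpenCover", "Clsid": OPENCOVER_CLSID, "DirectLaunch": "false"},
    )
    ET.SubElement(profiler, "EnvironmentList")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(add_opencover_profiler(SAMPLE_CONFIG))
```

Running it a second time on its own output changes nothing, so it is safe to leave in a setup script.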

IIS

I can’t find any special IIS support. I’m not saying OpenCover can’t be used to cover an application running in IIS, only that I didn’t find any help for it. I may investigate this later.

Sequence Point coverage

OpenCover counts sequence points, not statements. Yay!

Conclusion

Pros:

- free

- open source

- active project

- XML/HTML/LaTeX reports (via ReportGenerator)

- report merging (via ReportGenerator)

- Isolator support is easy to add (and may be included in future Isolators)

- auto-deploy package is easy to make

Cons:

- no IDE integration

- no help with IIS profiling

I really like OpenCover. It’s easy to use, relatively full-featured, and free. In a work environment, where there’s a tonne of developers who want the in-IDE profiling experience, it may not be the best bet, but I’d use it for my personal .NET projects in a flash.

Hasty Impressions: PartCover

Today I’m looking at my second candidate: PartCover.

Technical stuff

PartCover has a GUI runner as well as a command-line mode. It integrates with Isolator, but doesn’t offer any help for those wanting to profile IIS-hosted applications.

There are some XSL files provided that allow one to generate HTML reports, but probably the better way is to use ReportGenerator to make HTML or XML reports.

PartCover claims to be auto-deployable, but I did not try this.

Project Concerns

The hardest thing about working with PartCover is learning about PartCover – finding definitive information about the project’s state is quite difficult. Searching with Google finds the SourceForge project which contains a note to see latest news on the PartCover blog, which hasn’t been updated since 17 June 2009. Back at SourceForge, you can download a readme written by Shaun Wilde, which says that he’s the last active developer and has moved development to a GitHub project.

At last! A project with recent (26 June 2011) updates. Unfortunately, my trials did not end here. I tried a number of versions, each with its own quirks. I did not keep as careful track of the versions as I should have, so I can’t say which version (from either GitHub or SourceForge) had which problem, but I can describe the problems.

At first I thought things were working really well, but then noticed that I had abnormally high coverage levels on my projects – one legacy project that I knew had about 5% coverage was registering as over 20%!

I looked at one assembly’s summary and found 6 classes with 0% coverage and one with 80%, and the assembly was registering 80%. It turns out that completely uncovered classes were not counting against the total.
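The arithmetic behind that inflation is easy to reproduce. The Python sketch below is not PartCover’s code, just an illustration of the effect described: the class sizes are invented (six untested classes of 50 sequence points each, plus one 100-point class with 80 points visited), and the function name is hypothetical.

```python
def assembly_coverage(classes, skip_uncovered=False):
    """Percent coverage over (visited, total) sequence-point pairs.

    With skip_uncovered=True, classes that were never visited are left out
    of the total, which mimics the inflated figures described above.
    """
    visited_sum = 0
    total_sum = 0
    for visited, total in classes:
        if skip_uncovered and visited == 0:
            continue
        visited_sum += visited
        total_sum += total
    return 100.0 * visited_sum / total_sum if total_sum else 0.0


# Invented numbers: six completely untested classes and one at 80%.
CLASSES = [(0, 50)] * 6 + [(80, 100)]

if __name__ == "__main__":
    print(assembly_coverage(CLASSES))                       # 20.0
    print(assembly_coverage(CLASSES, skip_uncovered=True))  # 80.0
```

With every class counted, the assembly sits at 20%; drop the untouched classes from the denominator and the same run reports 80%.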

I tried other versions, with either the same results, or failures to run altogether. Ultimately, I gave up.

A Successor

It turns out that PartCover has a successor of sorts – Shaun Wilde, the last surviving maintainer of PartCover, has started his own coverage tool – OpenCover. It already seems to be a viable PartCover replacement, and is in active development, so I’ll be checking it out as a free, non-IDE-integrated coverage tool.

Conclusion

Pros:

- free!

- XML/HTML via ReportGenerator

- report merging via ReportGenerator

- Isolator support

- auto-deployable (reported)

- sequence point coverage

Cons:

- no IDE integration

- no special IIS support

- forked implementations, each with their own warts

- not quite abandoned, but not a lot of interest behind the project

Until I noticed the high coverage levels, I didn’t mind PartCover. I figured its lack of price and its Isolator support made it a viable candidate. Unfortunately, the high coverage reports and other problems soured me on the deal, as did the lack of maintenance. I’m going to look at OpenCover instead.
